Project EXPLAINER

EXPLAINER: REVEALING THE HIDDEN PARTS OF ARGUMENTS

About the project

When people argue, they do not always state every step of their reasoning. Some parts remain implicit, relying on knowledge or beliefs assumed to be shared. An enthymeme is precisely such an incomplete argument, in which a premise or a conclusion is left unstated. Humans use this form of reasoning naturally, but for artificial intelligence methods it represents a major challenge.

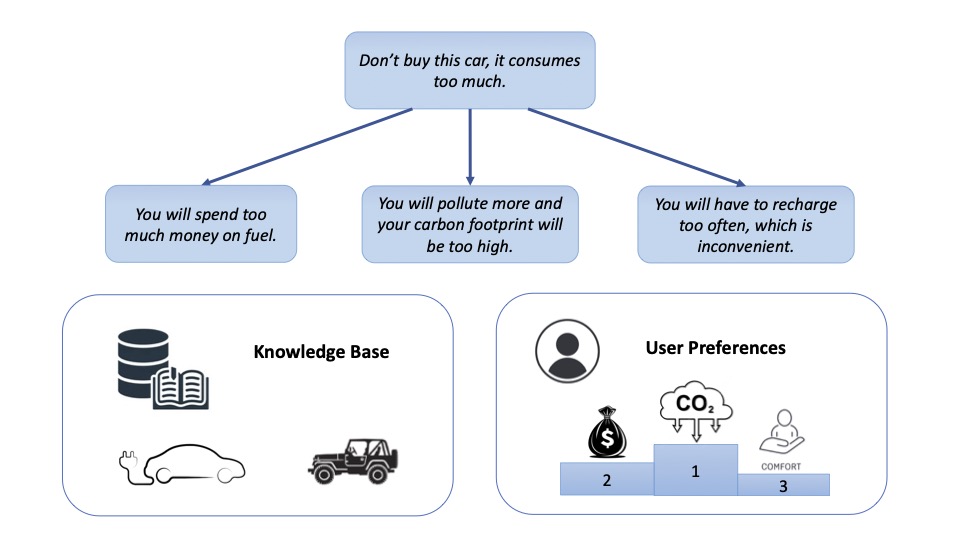

Take an example. Someone says: “Don’t buy this car, it consumes too much.” At first glance, the argument seems clear, but it is ambiguous: too much in what sense? Several possible decodings emerge:

“You will spend too much money on fuel.”

“You will pollute more and your carbon footprint will be too high.”

“You will have to recharge too often, which is inconvenient.”

Without explicit clarification, it is difficult to know which reasoning is actually intended. The enthymeme therefore leaves an uncertainty that decoding seeks to resolve.
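To make the idea concrete, the car example can be written down as a small data structure: one stated premise, one claim, and several candidate decodings of the unstated premise. This is only an illustrative sketch in Python. The class names `Enthymeme` and `Decoding`, the `reading` labels, and the `is_ambiguous` helper are assumptions introduced here and are not part of the EXPLAINER project's methods or dataset format.

```python
from dataclasses import dataclass, field


@dataclass
class Decoding:
    """One candidate reconstruction of the unstated premise (hypothetical type)."""
    implicit_premise: str
    reading: str  # hypothetical label for the sense given to "too much"


@dataclass
class Enthymeme:
    """A stated premise and claim, plus the candidate decodings (hypothetical type)."""
    stated_premise: str
    claim: str
    decodings: list[Decoding] = field(default_factory=list)

    def is_ambiguous(self) -> bool:
        # More than one plausible decoding means the intended reasoning is uncertain.
        return len(self.decodings) > 1


# The example from the text, with its three possible decodings.
car = Enthymeme(
    stated_premise="This car consumes too much.",
    claim="Don't buy this car.",
    decodings=[
        Decoding("You will spend too much money on fuel.", "cost"),
        Decoding(
            "You will pollute more and your carbon footprint will be too high.",
            "environment",
        ),
        Decoding(
            "You will have to recharge too often, which is inconvenient.",
            "convenience",
        ),
    ],
)

# Print each completed argument: stated premise + implicit premise => claim.
for d in car.decodings:
    print(f"[{d.reading}] {car.stated_premise} + {d.implicit_premise} => {car.claim}")
```

In this representation, decoding amounts to choosing (or ranking) one entry in `decodings`, and the uncertainty described above corresponds to `is_ambiguous()` returning true.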

Current approaches in artificial intelligence can generate arguments or simulate debates. However, they struggle with implicit content: they often miss essential assumptions or, conversely, invent unfounded ones. Their results then lose reliability, transparency, and credibility.

The EXPLAINER project, a one-year postdoctoral fellowship supported by the RISE Academy and led by Victor David in collaboration with Anthony Hunter and Serena Villata, aims to develop methods for decoding enthymemes: making implicit parts of reasoning visible and evaluating the quality of the reconstructions produced.

A central objective will be the creation of a dataset of debates designed to include targeted categories of enthymemes. Rational or commonsense forms already pose a major challenge; more complex ones, such as those linked to psychology or human behavior, will be left for future work. The debates will be constructed in a controlled way to reflect these difficulties, providing a solid basis for exploring different ways of completing implicit reasoning and for comparing the quality of the resulting decodings.

Beyond this dataset, EXPLAINER will also develop methods to reconstruct missing steps in reasoning and to assess whether reconstructions are accurate, coherent, and useful.

Why is this crucial? Because enthymemes are everywhere: in political debates, legal reasoning, everyday discussions, or online exchanges. For AI methods to contribute meaningfully in such contexts, they must learn not only to generate arguments but also to make explicit what humans usually leave implicit. Only then does reasoning become truly understandable and verifiable.

By tackling this challenge, EXPLAINER will lay the foundations for an approach to AI that does not simply generate text, but that explains its reasoning. With both a reference dataset and an innovative methodology for handling implicit content, the project aims to take a decisive step toward more transparent and trustworthy artificial intelligence.

- Principal investigators
  - Victor David (Inria, i3S)
- Project partners
  - Anthony Hunter (UCL)
  - Serena Villata (CNRS, i3S)
- Duration
  - 12 months
- Total amount
  - 60k€